Text Generation with an RNN in Practice: Shakespeare-Style Verse (TensorFlow 2.0 Official Tutorial, Translated)

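This tutorial demonstrates how to generate text using a character-based RNN. We will use the Shakespeare dataset from Andrej Karpathy's article The Unreasonable Effectiveness of Recurrent Neural Networks. Given a sequence of characters from this data ("Shakespear"), we train a model to predict the next character in the sequence ("e"). Longer sequences of text can be generated by calling the model repeatedly.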

Note: Enabling GPU acceleration speeds up execution. In Colab, select Runtime > Change runtime type > Hardware accelerator > GPU. If you run locally, make sure your TensorFlow version is 1.11.0 or later.

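This tutorial includes runnable code implemented using tf.keras and eager execution. The following is sample output from the model in this tutorial after training for 30 epochs, starting with the string "Q":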

QUEENE: I had thought thou hadst a Roman; for the oracle, Thus by All bids the man against the word, Which are so weak of care, by old care done; Your children were in your holy love, And the precipitation through the bleeding throne.

BISHOP OF ELY: Marry, and will, my lord, to weep in such a one were prettiest; Yet now I was adopted heir Of the world's lamentable day, To watch the next way with his father with his face?

ESCALUS: The cause why then we are all resolved more sons.

VOLUMNIA: O, no, no, no, no, no, no, no, no, no, no, no, no, no, no, no, no, no, no, no, no, it is no sin it should be dead, And love and pale as any will to that word.

QUEEN ELIZABETH: But how long have I heard the soul for this world, And show his hands of life be proved to stand.

PETRUCHIO: I say he look'd on, if I must be content To stay him from the fatal of our country's bliss. His lordship pluck'd from this sentence then for prey, And then let us twain, being the moon, were she such a case as fills m

While some of the sentences are grammatical, most do not make sense. The model has not learned the meaning of words, but consider:

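- The model is character-based. When training started, the model did not know how to spell an English word, or even that words were a unit of text.

- The structure of the output resembles a play: blocks of text generally begin with a speaker name, written in all capital letters just as in the dataset.

- As demonstrated below, although the model is trained on small batches of text (100 characters each), it is still able to generate longer sequences of text with coherent structure.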

1. Setup

1.1. Import TensorFlow and other libraries

```python
from __future__ import absolute_import, division, print_function, unicode_literals

# !pip install tensorflow-gpu==2.0.0-alpha0
import tensorflow as tf

import numpy as np
import os
import time
```

```
Collecting tensorflow-gpu==2.0.0-alpha0
Successfully installed google-pasta-0.1.4 tb-nightly-1.14.0a20190303 tensorflow-estimator-2.0-preview-1.14.0.dev2019030300 tensorflow-gpu==2.0.0-alpha0-2.0.0.dev20190303
```

1.2. Download the Shakespeare dataset

Change the following line to run this code on your own data.

```python
path_to_file = tf.keras.utils.get_file('shakespeare.txt', 'https://storage.googleapis.com/download.tensorflow.org/data/shakespeare.txt')
```

1.3. Read the data

First, let's take a look at the text.

```python
# Read, then decode for py2 compat.
text = open(path_to_file, 'rb').read().decode(encoding='utf-8')
# length of text is the number of characters in it
print ('Length of text: {} characters'.format(len(text)))
```

```
Length of text: 1115394 characters
```

```python
# Take a look at the first 250 characters in text
print(text[:250])
```

```
First Citizen:
Before we proceed any further, hear me speak.

All:
Speak, speak.

First Citizen:
You are all resolved rather to die than to famish?

All:
Resolved. resolved.

First Citizen:
First, you know Caius Marcius is chief enemy to the people.
```

```python
# The unique characters in the file
vocab = sorted(set(text))
print ('{} unique characters'.format(len(vocab)))
```

```
65 unique characters
```

2. Process the text

2.1. Vectorize the text

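Before training, we need to map strings to a numerical representation. Create two lookup tables: one mapping characters to numbers, and another mapping numbers back to characters.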

```python
# Creating a mapping from unique characters to indices
char2idx = {u:i for i, u in enumerate(vocab)}
idx2char = np.array(vocab)

text_as_int = np.array([char2idx[c] for c in text])
```

Now every character has an integer representation. Note that the characters are mapped to indices from 0 to len(vocab) - 1.
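To see the two lookup tables in isolation, here is a small sketch that applies the same construction to a tiny stand-in string (the string "hello world" is an assumption for illustration, not the Shakespeare data). It shows that encoding and then decoding recovers the text, and that the largest index is len(vocab) - 1.

```python
import numpy as np

# Tiny stand-in corpus (hypothetical); the tutorial uses the full Shakespeare text.
text = "hello world"

# Sorted unique characters, exactly as in the tutorial.
vocab = sorted(set(text))

# Character -> index and index -> character lookup tables.
char2idx = {u: i for i, u in enumerate(vocab)}
idx2char = np.array(vocab)

# Encode the whole text as integers.
text_as_int = np.array([char2idx[c] for c in text])

# Decoding by fancy-indexing idx2char recovers the original string.
decoded = ''.join(idx2char[text_as_int])

print(vocab)        # [' ', 'd', 'e', 'h', 'l', 'o', 'r', 'w']
print(text_as_int)  # [3 2 4 4 5 0 7 5 6 4 1]
print(decoded)      # hello world
```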

```python
print('{')
for char,_ in zip(char2idx, range(20)):
    print('  {:4s}: {:3d},'.format(repr(char), char2idx[char]))
print('  ...\n}')
```

```
{
  '\n':   0,
  ' ' :   1,
  '!' :   2,
  ...
  'F' :  18,
  'G' :  19,
  ...
}
```

```python
# Show how the first 13 characters from the text are mapped to integers
print ('{} ---- characters mapped to int ---- > {}'.format(repr(text[:13]), text_as_int[:13]))
```

```
'First Citizen' ---- characters mapped to int ---- > [18 47 56 57 58  1 15 47 58 47 64 43 52]
```

2.2. The prediction task

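Given a character, or a sequence of characters, what is the most probable next character? This is the task we are training the model to perform. The input to the model is a sequence of characters, and we train the model to predict the output: the following character at each time step.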

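Because an RNN maintains an internal state that depends on the elements it has seen so far, the question becomes: given all the characters processed up to this point, what is the next character?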
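To make the "internal state" idea concrete, here is a minimal numpy sketch of a plain (Elman-style) RNN cell, h_t = tanh(W_x x_t + W_h h_{t-1} + b). This is an illustration only: it is not the LSTM layer the tutorial uses later, and the sizes and random weights are hypothetical. Changing only the first input changes the final state, which shows that the state carries information from every step seen so far.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes, chosen only for illustration.
input_dim, hidden_dim, seq_len = 4, 3, 5

# Random (untrained) weights for a simple RNN cell.
W_x = rng.normal(scale=0.5, size=(hidden_dim, input_dim))
W_h = rng.normal(scale=0.5, size=(hidden_dim, hidden_dim))
b = np.zeros(hidden_dim)

def rnn_step(x_t, h_prev):
    # The new state depends on the current input AND the previous state.
    return np.tanh(W_x @ x_t + W_h @ h_prev + b)

def run(xs):
    # Start from a zero state and thread it through every time step.
    h = np.zeros(hidden_dim)
    states = []
    for x_t in xs:
        h = rnn_step(x_t, h)
        states.append(h)
    return np.stack(states)

xs = rng.normal(size=(seq_len, input_dim))
states = run(xs)

# Perturb only the first input and rerun.
xs_changed = xs.copy()
xs_changed[0] += 1.0
states_changed = run(xs_changed)

print(states.shape)                              # (5, 3)
print(states[-1] - states_changed[-1])           # nonzero: the early change persists
```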

2.3. Create training examples and targets

Next, divide the text into training examples and targets. Each training example contains seq_length characters taken from the text.

The corresponding target contains the same length of text, but shifted one character to the right.

So break the text into chunks of seq_length+1 characters. For example, if seq_length is 4 and our text is "Hello", the training example is "Hell" and the target is "ello".
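The "Hello" example can be checked directly with plain string slicing. This sketch uses the same slicing as the tutorial's split_input_target function (defined later on tensors), applied here to an ordinary Python string as an assumption for illustration.

```python
def split_input_target(chunk):
    # Input drops the last character; target drops the first one.
    return chunk[:-1], chunk[1:]

seq_length = 4
chunk = "Hello"                      # one chunk of seq_length + 1 characters
assert len(chunk) == seq_length + 1

inp, tgt = split_input_target(chunk)
print(inp, tgt)                      # Hell ello

# Each position pairs an input character with the character that follows it.
pairs = list(zip(inp, tgt))
for i, (c_in, c_out) in enumerate(pairs):
    print(i, repr(c_in), '->', repr(c_out))
```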

To do this, first use the tf.data.Dataset.from_tensor_slices function to convert the text vector into a stream of character indices.

```python
# The maximum length sentence we want for a single input in characters
seq_length = 100
examples_per_epoch = len(text)//seq_length

# Create training examples / targets
char_dataset = tf.data.Dataset.from_tensor_slices(text_as_int)

for i in char_dataset.take(5):
    print(idx2char[i.numpy()])
```

```
F
i
r
s
t
```

The batch method lets us easily convert these individual characters into sequences of the desired size.

```python
sequences = char_dataset.batch(seq_length+1, drop_remainder=True)

for item in sequences.take(5):
    print(repr(''.join(idx2char[item.numpy()])))
```

```
'First Citizen:\nBefore we proceed any further, hear me speak.\n\nAll:\nSpeak, speak.\n\nFirst Citizen:\nYou '
'are all resolved rather to die than to famish?\n\nAll:\nResolved. resolved.\n\nFirst Citizen:\nFirst, you k'
"now Caius Marcius is chief enemy to the people.\n\nAll:\nWe know't, we know't.\n\nFirst Citizen:\nLet us ki"
"ll him, and we'll have corn at our own price.\nIs't a verdict?\n\nAll:\nNo more talking on't; let it be d"
'one: away, away!\n\nSecond Citizen:\nOne word, good citizens.\n\nFirst Citizen:\nWe are accounted poor citi'
```
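What batch(seq_length+1, drop_remainder=True) does to a flat stream can be mimicked in numpy by truncating to a whole number of chunks and reshaping. This is a sketch of the semantics, not how tf.data implements it; batch_drop_remainder is a hypothetical helper name. The same arithmetic gives the number of 101-character sequences in the 1,115,394-character text.

```python
import numpy as np

def batch_drop_remainder(arr, size):
    # Keep only as many elements as fill complete chunks, then reshape.
    n_chunks = len(arr) // size
    return arr[: n_chunks * size].reshape(n_chunks, size)

chunks = batch_drop_remainder(np.arange(10), 3)
print(chunks)
# [[0 1 2]
#  [3 4 5]
#  [6 7 8]]    (element 9 is dropped)

# For the Shakespeare text: chunks of seq_length + 1 = 101 characters.
num_chars, seq_length = 1115394, 100
n_sequences = num_chars // (seq_length + 1)
n_dropped = num_chars % (seq_length + 1)
print(n_sequences, n_dropped)   # 11043 51
```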

For each sequence, duplicate and shift it to form the input and target text, using the map method to apply a simple function to each batch:

```python
def split_input_target(chunk):
    input_text = chunk[:-1]
    target_text = chunk[1:]
    return input_text, target_text

dataset = sequences.map(split_input_target)
```

Print the first example's input and target values:

```python
for input_example, target_example in dataset.take(1):
    print ('Input data: ', repr(''.join(idx2char[input_example.numpy()])))
    print ('Target data:', repr(''.join(idx2char[target_example.numpy()])))
```

```
Input data:  'First Citizen:\nBefore we proceed any further, hear me speak.\n\nAll:\nSpeak, speak.\n\nFirst Citizen:\nYou'
Target data: 'irst Citizen:\nBefore we proceed any further, hear me speak.\n\nAll:\nSpeak, speak.\n\nFirst Citizen:\nYou '
```

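Each index of these vectors is processed as one time step. For the input at time step 0, the model receives the index for "F" and tries to predict the index for "i" as the next character. At the next time step it does the same thing, but in addition to the current character the RNN also takes into account the context from the previous step.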

```python
for i, (input_idx, target_idx) in enumerate(zip(input_example[:5], target_example[:5])):
    print("Step {:4d}".format(i))
    print("  input: {} ({:s})".format(input_idx, repr(idx2char[input_idx])))
    print("  expected output: {} ({:s})".format(target_idx, repr(idx2char[target_idx])))
```

```
Step    0
  input: 18 ('F')
  expected output: 47 ('i')
...
Step    4
  input: 58 ('t')
  expected output: 1 (' ')
```

2.4. Create batches of text with tf.data and shuffle them

We used tf.data to split the text into manageable sequences. But before feeding this data into the model, we need to shuffle it and pack it into batches.

```python
# Batch size
BATCH_SIZE = 64

# Buffer size to shuffle the dataset
# (TF data is designed to work with possibly infinite sequences,
# so it doesn't attempt to shuffle the entire sequence in memory. Instead,
# it maintains a buffer in which it shuffles elements).
BUFFER_SIZE = 10000

dataset = dataset.shuffle(BUFFER_SIZE).batch(BATCH_SIZE, drop_remainder=True)

dataset
```
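The comment above describes shuffling through a fixed-size buffer instead of the whole dataset. Below is a minimal pure-Python sketch of that idea; buffered_shuffle is a hypothetical name and this is not tf.data's actual implementation. An element can only be emitted after it has entered the buffer, so with buffer_size=1 the order is unchanged, and larger buffers mix elements over a wider window. With about 11,043 sequences and BUFFER_SIZE = 10000, the buffer covers most of the dataset.

```python
import random

def buffered_shuffle(items, buffer_size, seed=None):
    # Sketch of a fixed-size shuffle buffer (not tf.data's source code).
    rng = random.Random(seed)
    buffer = []
    for item in items:
        buffer.append(item)
        if len(buffer) == buffer_size:
            # Buffer full: emit a random element (swap it to the end, then pop).
            i = rng.randrange(buffer_size)
            buffer[i], buffer[-1] = buffer[-1], buffer[i]
            yield buffer.pop()
    # Input exhausted: emit whatever is left, in random order.
    rng.shuffle(buffer)
    yield from buffer

out = list(buffered_shuffle(range(20), buffer_size=5, seed=0))
print(out)

# With a buffer of one element nothing is reordered.
print(list(buffered_shuffle(range(5), buffer_size=1)))   # [0, 1, 2, 3, 4]
```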

```
<BatchDataset shapes: ((64, 100), (64, 100)), types: (tf.int64, tf.int64)>
```

3. Build the model

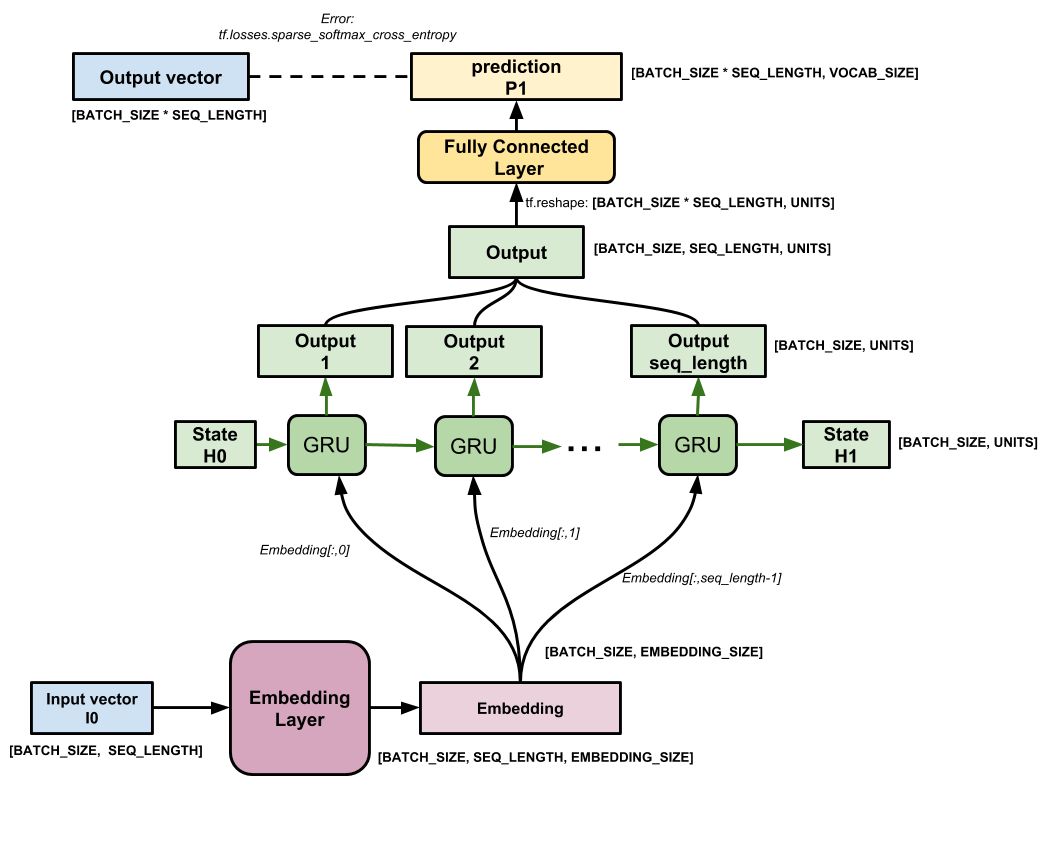

Use tf.keras.Sequential to define the model. For this simple example, three layers are enough:

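- tf.keras.layers.Embedding: the input layer. A trainable lookup table that maps the number of each character to a vector with embedding_dim dimensions.

- tf.keras.layers.LSTM: a type of RNN with size units=rnn_units. (You could also use a GRU layer, tf.keras.layers.GRU, here.)

- tf.keras.layers.Dense: the output layer, with vocab_size outputs.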

```python
# Length of the vocabulary in chars
vocab_size = len(vocab)

# The embedding dimension
embedding_dim = 256

# Number of RNN units
rnn_units = 1024
```

```python
def build_model(vocab_size, embedding_dim, rnn_units, batch_size):
    model = tf.keras.Sequential([
        tf.keras.layers.Embedding(vocab_size, embedding_dim,
                                  batch_input_shape=[batch_size, None]),
        tf.keras.layers.LSTM(rnn_units,
                             return_sequences=True,
                             stateful=True,
                             recurrent_initializer='glorot_uniform'),
        tf.keras.layers.Dense(vocab_size)
    ])
    return model
```

```python
model = build_model(
    vocab_size = len(vocab),
    embedding_dim=embedding_dim,
    rnn_units=rnn_units,
    batch_size=BATCH_SIZE)
```

For each character, the model looks up the embedding, runs the LSTM for one time step with the embedding as input, and applies the dense layer to generate logits predicting the log-likelihood of the next character.

4. Try the model

Now run the model to check that it behaves as expected. First, check the shape of the output:

```python
for input_example_batch, target_example_batch in dataset.take(1):
    example_batch_predictions = model(input_example_batch)
    print(example_batch_predictions.shape, "# (batch_size, sequence_length, vocab_size)")
```

```
(64, 100, 65) # (batch_size, sequence_length, vocab_size)
```

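In the example above, the input sequence length is 100, but the model can run on inputs of any length (the sequence dimension is None in the summary):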

```python
model.summary()
```

```
Model: "sequential"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
embedding (Embedding)        (64, None, 256)           16640
_________________________________________________________________
unified_lstm (UnifiedLSTM)   (64, None, 1024)          5246976
_________________________________________________________________
dense (Dense)                (64, None, 65)            66625
=================================================================
Total params: 5,330,241
Trainable params: 5,330,241
Non-trainable params: 0
_________________________________________________________________
```
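The parameter counts in the summary follow from the layer sizes. A quick arithmetic sketch, assuming the standard LSTM parameterization (four gates, each with an input kernel, a recurrent kernel and a bias) and a Dense layer with bias; a GRU would use a different formula. The helper function names are hypothetical.

```python
def embedding_params(vocab_size, embedding_dim):
    # One trainable vector per character.
    return vocab_size * embedding_dim

def lstm_params(input_dim, units):
    # 4 gates x (input kernel + recurrent kernel + bias).
    return 4 * (input_dim * units + units * units + units)

def dense_params(input_dim, units):
    # Weight matrix plus bias.
    return input_dim * units + units

vocab_size, embedding_dim, rnn_units = 65, 256, 1024
counts = [
    embedding_params(vocab_size, embedding_dim),
    lstm_params(embedding_dim, rnn_units),
    dense_params(rnn_units, vocab_size),
]
print(counts, sum(counts))   # [16640, 5246976, 66625] 5330241
```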

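To get actual predictions from the model, we need to sample from the output distribution to obtain actual character indices. This distribution is defined by the logits over the character vocabulary.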

Note: It is important to sample from this distribution, because taking its argmax can easily get the model stuck in a loop.
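The difference between argmax and sampling can be seen with a numpy stand-in for tf.random.categorical (a sketch under that assumption, with hypothetical logits). Repeating the same distribution 1,000 times, argmax always picks the same index, while sampling picks each index roughly in proportion to its softmax probability.

```python
import numpy as np

def softmax(logits):
    # Subtract the row max for numerical stability.
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def sample_from_logits(logits, rng):
    # Draw one index per row according to softmax(logits).
    probs = softmax(logits)
    return np.array([rng.choice(len(p), p=p) for p in probs])

rng = np.random.default_rng(0)
logits = np.array([[2.0, 1.0, 0.5]] * 1000)   # same distribution, 1000 times

greedy = logits.argmax(axis=-1)               # always index 0
sampled = sample_from_logits(logits, rng)

probs = softmax(logits[:1])[0]
freq = np.bincount(sampled, minlength=3) / len(sampled)
print(np.bincount(greedy, minlength=3))       # [1000    0    0]
print(np.round(probs, 3), np.round(freq, 3))  # frequencies track the probabilities
```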

Try it for the first example in the batch:

```python
sampled_indices = tf.random.categorical(example_batch_predictions[0], num_samples=1)
sampled_indices = tf.squeeze(sampled_indices,axis=-1).numpy()
```

This gives us, at each time step, a prediction of the next character index:

```python
sampled_indices
```

```
array([21,  2, 58, 40, 42, 32, 39,  7, 18, 38, 30, 58, 23, 58, 37, 10, 23,
       16, 52, 14, 43,  8, 32, 49, 62, 41, 53, 38, 17, 36, 24, 59, 41, 38,
        4, 27, 33, 59, 54, 34, 14,  1,  1, 56, 55, 40, 37,  4, 32, 44, 62,
       59,  1, 10, 20, 29,  2, 48, 37, 26, 10, 22, 58,  5, 26,  9, 23, 26,
       54, 43, 46, 36, 62, 57,  8, 53, 52, 23, 57, 42, 60, 10, 43, 11, 45,
       12, 28, 46, 46, 15, 51,  9, 56,  7, 53, 51,  2,  1, 10, 58])
```

Decode these to see the text predicted by this untrained model:

```python
print("Input: \n", repr("".join(idx2char[input_example_batch[0]])))
print()
print("Next Char Predictions: \n", repr("".join(idx2char[sampled_indices ])))
```

```
Input:
 'to it far before thy time?\nWarwick is chancellor and the lord of Calais;\nStern Falconbridge commands'

Next Char Predictions:
 "I!tbdTa-FZRtKtY:KDnBe.TkxcoZEXLucZ&OUupVB  rqbY&Tfxu :HQ!jYN:Jt'N3KNpehXxs.onKsdv:e;g?PhhCm3r-om! :t"
```

5. Train the model

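At this point the problem can be treated as a standard classification problem: given the previous RNN state and the input at this time step, predict the class of the next character.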

5.1. Attach an optimizer and a loss function

The standard tf.keras.losses.sparse_categorical_crossentropy loss function works in this case because it is applied across the last dimension of the predictions.

Because our model returns logits, we need to set the from_logits flag.

```python
def loss(labels, logits):
    return tf.keras.losses.sparse_categorical_crossentropy(labels, logits, from_logits=True)

example_batch_loss = loss(target_example_batch, example_batch_predictions)
print("Prediction shape: ", example_batch_predictions.shape, " # (batch_size, sequence_length, vocab_size)")
print("scalar_loss: ", example_batch_loss.numpy().mean())
```

```
Prediction shape:  (64, 100, 65)  # (batch_size, sequence_length, vocab_size)
scalar_loss:  4.174188
```
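The untrained model's loss of 4.174188 is almost exactly ln(65) ≈ 4.1744, the cross-entropy of a uniform guess over 65 characters. The numpy sketch below computes sparse categorical cross-entropy from logits (a hand-written stand-in for the Keras function, not its source code; labels are random for illustration) to show that uniform logits give ln(vocab_size) at every position, while confident correct logits give a loss near 0.

```python
import numpy as np

def sparse_ce_from_logits(labels, logits):
    # Stable log-softmax over the last axis.
    z = logits - logits.max(axis=-1, keepdims=True)
    log_probs = z - np.log(np.exp(z).sum(axis=-1, keepdims=True))
    # Pick the log-probability of the true class at every position.
    picked = np.take_along_axis(log_probs, labels[..., None], axis=-1)[..., 0]
    return -picked   # per-position loss, same shape as labels

batch, seq_len, vocab_size = 2, 5, 65
labels = np.random.default_rng(0).integers(0, vocab_size, size=(batch, seq_len))

# An untrained-looking model: all logits equal.
uniform_logits = np.zeros((batch, seq_len, vocab_size))
uniform_loss = sparse_ce_from_logits(labels, uniform_logits)
print(uniform_loss.shape, uniform_loss.mean(), np.log(vocab_size))

# A confident, correct model: large logit on the true class.
confident = np.full((batch, seq_len, vocab_size), -10.0)
np.put_along_axis(confident, labels[..., None], 10.0, axis=-1)
print(sparse_ce_from_logits(labels, confident).max())   # close to 0
```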

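Configure the training procedure with the tf.keras.Model.compile method. We'll use tf.keras.optimizers.Adam with its default arguments, together with the loss function defined above.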

```python
model.compile(optimizer='adam', loss=loss)
```

5.2. Configure checkpoints

Use a tf.keras.callbacks.ModelCheckpoint callback to ensure that checkpoints are saved during training:

```python
# Directory where the checkpoints will be saved
checkpoint_dir = './training_checkpoints'
# Name of the checkpoint files
checkpoint_prefix = os.path.join(checkpoint_dir, "ckpt_{epoch}")

checkpoint_callback=tf.keras.callbacks.ModelCheckpoint(
    filepath=checkpoint_prefix,
    save_weights_only=True)
```

5.3. Execute the training

To keep training time reasonable, train the model for 10 epochs. In Colab, set the runtime to GPU for faster training.

```python
EPOCHS=10

history = model.fit(dataset, epochs=EPOCHS, callbacks=[checkpoint_callback])
```

```
Epoch 1/10
172/172 [==============================] - 31s 183ms/step - loss: 2.7052
......
Epoch 10/10
172/172 [==============================] - 31s 180ms/step - loss: 1.2276
```
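The "172/172" in the training log is the number of batches per epoch. It follows from the pipeline settings: chunks of seq_length + 1 characters with the remainder dropped, then full batches of BATCH_SIZE with the remainder dropped. A small arithmetic sketch (steps_per_epoch is a hypothetical helper name):

```python
def steps_per_epoch(num_chars, seq_length, batch_size):
    # Sequences of seq_length + 1 characters (drop_remainder=True).
    num_sequences = num_chars // (seq_length + 1)
    # Only full batches are kept (drop_remainder=True).
    return num_sequences // batch_size

steps = steps_per_epoch(1115394, 100, 64)
print(steps)   # 172
```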

6. Generate text

6.1. Restore the latest checkpoint

To keep this prediction step simple, use a batch size of 1.

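Because of the way the RNN state is passed from time step to time step (the LSTM layer is built with stateful=True), the model only accepts a fixed batch size once it has been built.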

To run the model with a different batch_size, we need to rebuild the model and restore the weights from the checkpoint.

```python
tf.train.latest_checkpoint(checkpoint_dir)
```

```
'./training_checkpoints/ckpt_10'
```

```python
model = build_model(vocab_size, embedding_dim, rnn_units, batch_size=1)

model.load_weights(tf.train.latest_checkpoint(checkpoint_dir))

model.build(tf.TensorShape([1, None]))

model.summary()
```

```
Model: "sequential_1"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
embedding_1 (Embedding)      (1, None, 256)            16640
_________________________________________________________________
unified_lstm_1 (UnifiedLSTM) (1, None, 1024)           5246976
_________________________________________________________________
dense_1 (Dense)              (1, None, 65)             66625
=================================================================
Total params: 5,330,241
Trainable params: 5,330,241
Non-trainable params: 0
_________________________________________________________________
```

6.2. The prediction loop

The following code block generates the text:

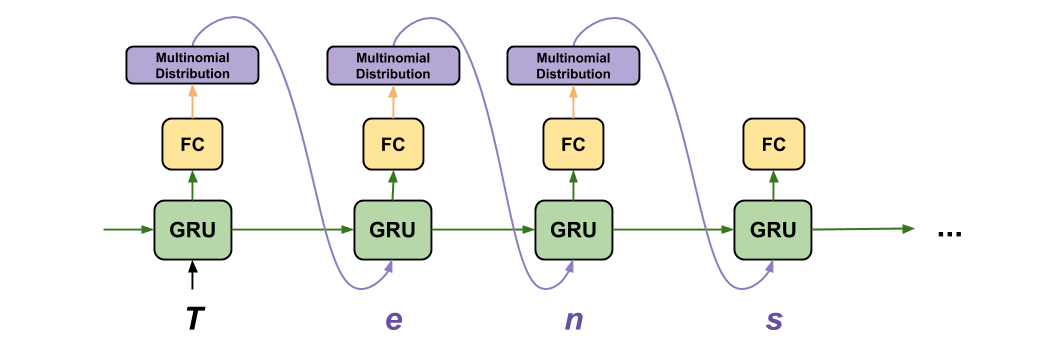

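- It starts by choosing a start string, initializing the RNN state, and setting the number of characters to generate.

- It gets the prediction distribution for the next character using the start string and the RNN state.

- Then it uses a categorical distribution to calculate the index of the predicted character, and uses this predicted character as the model's next input.

- The RNN state returned by the model is fed back into the model, so that it now has more context than just one character. After predicting the next character, the updated RNN state is again fed back into the model; this is how the model accumulates context from the previously predicted characters (the weights themselves do not change during generation).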
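The steps above can be sketched end to end without TensorFlow. In this numpy illustration the "model" is a tiny simple RNN with random, untrained weights (a hypothetical stand-in, not the tutorial's LSTM), so its output is gibberish; the point is the loop structure: reset the state, run the start string through the cell, then repeatedly sample a character and feed it back together with the updated state.

```python
import numpy as np

# Hypothetical toy vocabulary and random (untrained) weights.
vocab = sorted(set("abcdefghijklmnopqrstuvwxyz :\n"))
char2idx = {c: i for i, c in enumerate(vocab)}
V, H = len(vocab), 16

rng = np.random.default_rng(0)
W_in = rng.normal(scale=0.5, size=(H, V))    # plays the role of the embedding
W_h = rng.normal(scale=0.5, size=(H, H))     # recurrent weights
W_out = rng.normal(scale=0.5, size=(V, H))   # plays the role of the Dense layer

def step(char_id, h):
    # One time step: update the hidden state, then produce next-char logits.
    h = np.tanh(W_in[:, char_id] + W_h @ h)
    return W_out @ h, h

def generate_text(start_string, num_generate=50, temperature=1.0, seed=1):
    # start_string must be non-empty so that initial logits exist.
    sample_rng = np.random.default_rng(seed)
    h = np.zeros(H)                          # like model.reset_states()
    for c in start_string:                   # run the start string through the cell
        logits, h = step(char2idx[c], h)
    generated = []
    for _ in range(num_generate):
        scaled = logits / temperature
        probs = np.exp(scaled - scaled.max())
        probs /= probs.sum()
        char_id = sample_rng.choice(V, p=probs)   # sample, do not argmax
        generated.append(vocab[char_id])
        logits, h = step(char_id, h)              # feed prediction and state back
    return start_string + ''.join(generated)

print(generate_text("romeo: ", num_generate=40))
```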

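Looking at the generated text, you'll see that the model knows when to capitalize, forms paragraphs, and imitates a Shakespeare-like vocabulary. Because it was trained for only a few epochs, it has not yet learned to form coherent sentences.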

```python
def generate_text(model, start_string):
    # Evaluation step (generating text using the learned model)

    # Number of characters to generate
    num_generate = 1000

    # Converting our start string to numbers (vectorizing)
    input_eval = [char2idx[s] for s in start_string]
    input_eval = tf.expand_dims(input_eval, 0)

    # Empty list to store our results
    text_generated = []

    # Low temperatures results in more predictable text.
    # Higher temperatures results in more surprising text.
    # Experiment to find the best setting.
    temperature = 1.0

    # Here batch size == 1
    model.reset_states()
    for i in range(num_generate):
        predictions = model(input_eval)
        # remove the batch dimension
        predictions = tf.squeeze(predictions, 0)

        # using a categorical distribution to predict the character returned by the model
        predictions = predictions / temperature
        predicted_id = tf.random.categorical(predictions, num_samples=1)[-1,0].numpy()

        # We pass the predicted character as the next input to the model
        # along with the previous hidden state
        input_eval = tf.expand_dims([predicted_id], 0)

        text_generated.append(idx2char[predicted_id])

    return (start_string + ''.join(text_generated))
```

```python
print(generate_text(model, start_string=u"ROMEO: "))
```

```
ROMEO: now to have weth hearten sonce,
No more than the thing stand perfect your self,
Love way come. Up, this is d so do in friends:
If I fear e this, I poisple
My gracious lusty, born once for readyus disguised:
But that a pry; do it sure, thou wert love his cause;
My mind is come too!

POMPEY:
Serve my master's him: he hath extreme over his hand in the
where they shall not hear they right for me.

PROSSPOLUCETER:
I pray you, mistress, I shall be construted
With one that you shall that we know it, in this gentleasing earls of daiberkers now
he is to look upon this face, which leadens from his master as
you should not put what you perciploce backzat of cast,
Nor fear it sometime but for a pit
a world of Hantua?

First Gentleman:
That we can fall of bastards my sperial;
O, she Go seeming that which I have
what enby oar own best injuring them,
Or thom I do now, I, in heart is nothing gone,
Leatt the bark which was done born.

BRUTUS:
Both Margaret, he is sword of the house person. If born,
```

The easiest way to improve the results is to train the model for longer (try EPOCHS=30).

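You can also experiment with a different start string, try adding another RNN layer to improve the model's accuracy, or adjust the temperature parameter to generate more or less random predictions.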
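In generate_text, the logits are divided by temperature before sampling. This numpy sketch (hypothetical logits, plain softmax standing in for what tf.random.categorical samples from) shows the effect: a lower temperature concentrates probability on the most likely character (lower entropy, more predictable text), and a higher temperature flattens the distribution (higher entropy, more surprising text).

```python
import numpy as np

def softmax_with_temperature(logits, temperature):
    # Divide the logits by the temperature, then apply a stable softmax.
    scaled = np.asarray(logits, dtype=float) / temperature
    e = np.exp(scaled - scaled.max())
    return e / e.sum()

def entropy(p):
    # Shannon entropy in nats; higher means a flatter, more random distribution.
    return float(-np.sum(p * np.log(p)))

logits = [2.0, 1.0, 0.5, -1.0]   # hypothetical logits for four characters
dists = {t: softmax_with_temperature(logits, t) for t in (0.5, 1.0, 2.0)}
for t, p in dists.items():
    print(f"T={t}: probs={np.round(p, 3)}, entropy={entropy(p):.3f}")
```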

7. Advanced: Customized training

The training procedure above is simple, but it does not give you much control.

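Now that you have seen how to run the model manually, let's unpack the training loop and implement it ourselves. This gives you a starting point if, for example, you want to implement curriculum learning to help stabilize the model's open-loop output.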

We will use tf.GradientTape to track the gradients. You can learn more about this approach in the eager execution guide.

The procedure works as follows:

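- First, initialize the RNN state by calling the tf.keras.Model.reset_states method.

- Next, iterate over the dataset (batch by batch) and calculate the predictions associated with each batch.

- Open a tf.GradientTape, and calculate the predictions and the loss in that context.

- Calculate the gradients of the loss with respect to the model variables using the tf.GradientTape.gradient method.

- Finally, take a step downhill using the optimizer's apply_gradients method (tf.keras.optimizers.Optimizer.apply_gradients; here the optimizer is tf.keras.optimizers.Adam).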
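The same forward pass, gradient, apply pattern can be seen on a toy problem without TensorFlow. This numpy sketch trains a softmax classifier on hypothetical random data, computing the cross-entropy gradient by hand (softmax minus one-hot) where the tutorial lets tf.GradientTape do it, and applying plain gradient descent where the tutorial uses Adam. It illustrates the loop structure only; it is not the tutorial's model.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy data: 200 samples, 5 features, 3 classes.
X = rng.normal(size=(200, 5))
true_W = rng.normal(size=(5, 3))
y = (X @ true_W).argmax(axis=1)

W = np.zeros((5, 3))
b = np.zeros(3)
lr = 0.5

def forward_loss(W, b):
    # Forward pass: logits -> stable log-softmax -> mean cross-entropy.
    logits = X @ W + b
    z = logits - logits.max(axis=1, keepdims=True)
    log_probs = z - np.log(np.exp(z).sum(axis=1, keepdims=True))
    loss = -log_probs[np.arange(len(y)), y].mean()
    return loss, np.exp(log_probs)

def train_step(W, b):
    # 1) predictions and loss (what runs inside the GradientTape context)
    loss, probs = forward_loss(W, b)
    # 2) gradient of the mean cross-entropy w.r.t. logits: (softmax - one_hot) / N
    d_logits = probs.copy()
    d_logits[np.arange(len(y)), y] -= 1
    d_logits /= len(y)
    grad_W = X.T @ d_logits
    grad_b = d_logits.sum(axis=0)
    # 3) apply the gradients (plain gradient descent here; the tutorial uses Adam)
    return W - lr * grad_W, b - lr * grad_b, loss

losses = []
for step in range(100):
    W, b, loss = train_step(W, b)
    losses.append(loss)

print(losses[0], losses[-1])   # starts at ln(3) and decreases
```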

```python
model = build_model(
    vocab_size = len(vocab),
    embedding_dim=embedding_dim,
    rnn_units=rnn_units,
    batch_size=BATCH_SIZE)


optimizer = tf.keras.optimizers.Adam()
```

```python
@tf.function
def train_step(inp, target):
    with tf.GradientTape() as tape:
        predictions = model(inp)
        loss = tf.reduce_mean(
            tf.keras.losses.sparse_categorical_crossentropy(
                target, predictions, from_logits=True))
    grads = tape.gradient(loss, model.trainable_variables)
    optimizer.apply_gradients(zip(grads, model.trainable_variables))

    return loss
```

```python
# Training step
EPOCHS = 10

for epoch in range(EPOCHS):
    start = time.time()

    # Reset the hidden state at the start of every epoch
    # (reset_states() returns None, so its result is not stored)
    model.reset_states()

    for (batch_n, (inp, target)) in enumerate(dataset):
        loss = train_step(inp, target)

        if batch_n % 100 == 0:
            template = 'Epoch {} Batch {} Loss {}'
            print(template.format(epoch+1, batch_n, loss))

    # saving (checkpoint) the model every 5 epochs
    if (epoch + 1) % 5 == 0:
        model.save_weights(checkpoint_prefix.format(epoch=epoch))

    print ('Epoch {} Loss {:.4f}'.format(epoch+1, loss))
    print ('Time taken for 1 epoch {} sec\n'.format(time.time() - start))

model.save_weights(checkpoint_prefix.format(epoch=epoch))
```

```
.....
Epoch 10 Batch 0 Loss 1.2350478172302246
Epoch 10 Batch 100 Loss 1.1610674858093262
Epoch 10 Loss 1.1558
Time taken for 1 epoch 14.261839628219604 sec
```

Latest version: https://www.mashangxue123.com/tensorflow/tf2-tutorials-text-text_generation.html
English original: https://tensorflow.google.cn/beta/tutorials/text/text_generation