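Neural machine translation with attention (translation of the official TensorFlow 2.0 tutorial)

This tutorial trains a sequence-to-sequence (seq2seq) model for Spanish-to-English translation. It is an advanced example that assumes some familiarity with sequence-to-sequence models.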

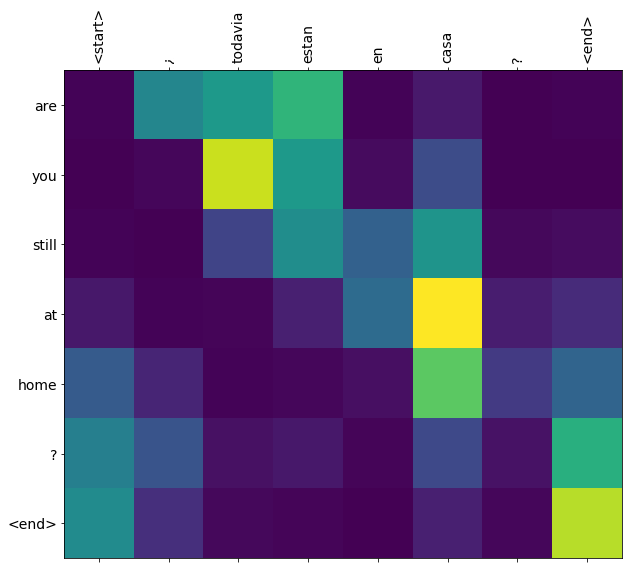

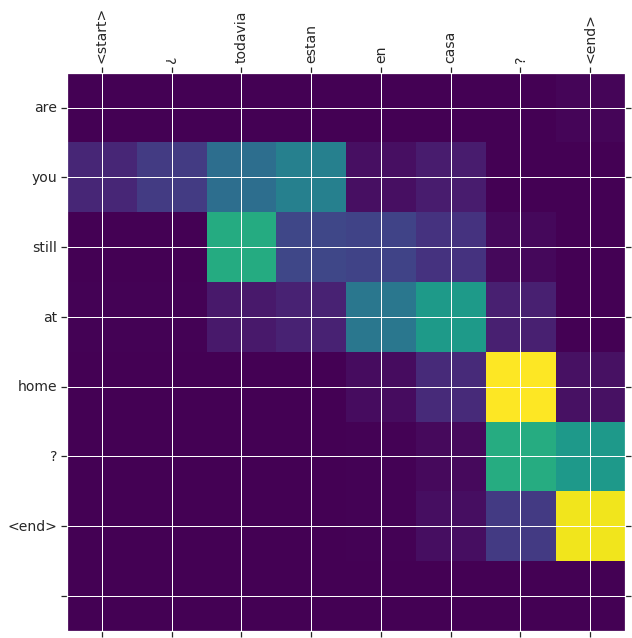

After training the model, you can enter a Spanish sentence such as "¿todavia estan en casa?" and get back the English translation: "are you still at home?"

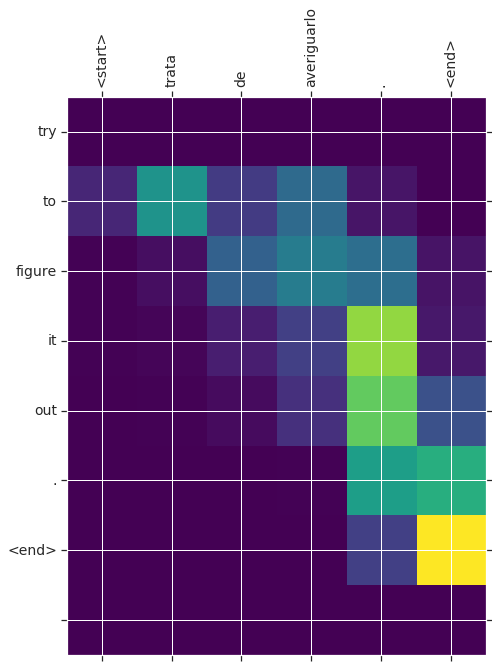

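The translation quality is reasonable for a toy example, but the generated attention plot is perhaps more interesting. It shows which parts of the input sentence the model attends to while translating.

Note: This example takes approximately 10 minutes to run on a single P100 GPU.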

```python
from __future__ import absolute_import, division, print_function, unicode_literals

import tensorflow as tf

import matplotlib.pyplot as plt
from sklearn.model_selection import train_test_split

import unicodedata
import re
import numpy as np
import os
import io
import time
```

1. Download and prepare the dataset

We will use a language dataset provided by http://www.manythings.org/anki/. This dataset contains language translation pairs in the following format:

```
May I borrow this book? ¿Puedo tomar prestado este libro?
```
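As a minimal sketch of how one such line can be taken apart, the snippet below splits a pair on the tab character, which is the separator the tutorial's own `create_dataset` function splits on. The helper name `split_pair` and the sample string are illustrative assumptions, not part of the tutorial.

```python
# Hypothetical sample line in the dataset's "English<TAB>Spanish" layout.
sample_line = "May I borrow this book?\t¿Puedo tomar prestado este libro?"


def split_pair(line):
    """Split one tab-separated line into an (english, spanish) tuple."""
    fields = line.rstrip("\n").split("\t")
    # Only the first two fields are used; any further columns are ignored.
    return fields[0], fields[1]


english, spanish = split_pair(sample_line)
print(english)
print(spanish)
```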

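A variety of languages is available, but we will use the English-Spanish dataset. For convenience, a copy of this dataset is hosted on Google Cloud, but you can also download your own copy. After downloading the dataset, these are the steps we take to prepare the data:

- Add a start and an end token to each sentence.

- Clean the sentences by removing special characters.

- Create a word index and a reverse word index (dictionaries mapping word → id and id → word).

- Pad each sentence to the maximum length.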
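The last two steps can be sketched in plain Python. This is only an illustration under stated assumptions: the helpers `build_word_index` and `pad_post` are hypothetical names, and ids are assigned in order of first appearance, whereas the Keras `Tokenizer` used in the tutorial assigns ids by word frequency. Id 0 is reserved for padding in both cases.

```python
def build_word_index(sentences):
    """Map each distinct token to an integer id starting at 1 (0 is kept for padding)."""
    word_index = {}
    for sentence in sentences:
        for token in sentence.split(" "):
            if token not in word_index:
                word_index[token] = len(word_index) + 1
    # Reverse mapping: id -> word.
    index_word = {idx: word for word, idx in word_index.items()}
    return word_index, index_word


def pad_post(sequences, pad_id=0):
    """Right-pad every sequence with pad_id up to the length of the longest one."""
    max_len = max(len(seq) for seq in sequences)
    return [seq + [pad_id] * (max_len - len(seq)) for seq in sequences]


sentences = ["<start> i can t trust you . <end>", "<start> go . <end>"]
word_index, index_word = build_word_index(sentences)
encoded = [[word_index[t] for t in s.split(" ")] for s in sentences]
padded = pad_post(encoded)
print(padded)
```

Padding at the end of the sequence corresponds to the `padding='post'` argument used in the tutorial code.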

```python
# Download the file
path_to_zip = tf.keras.utils.get_file(
    'spa-eng.zip', origin='http://storage.googleapis.com/download.tensorflow.org/data/spa-eng.zip',
    extract=True)

path_to_file = os.path.dirname(path_to_zip)+"/spa-eng/spa.txt"
```

```
Downloading data from http://storage.googleapis.com/download.tensorflow.org/data/spa-eng.zip
2646016/2638744 [==============================] - 0s 0us/step
2654208/2638744 [==============================] - 0s 0us/step
```

```python
# Converts the unicode file to ascii
def unicode_to_ascii(s):
    return ''.join(c for c in unicodedata.normalize('NFD', s)
                   if unicodedata.category(c) != 'Mn')


def preprocess_sentence(w):
    w = unicode_to_ascii(w.lower().strip())

    # creating a space between a word and the punctuation following it
    # eg: "he is a boy." => "he is a boy ."
    # Reference:- https://stackoverflow.com/questions/3645931/python-padding-punctuation-with-white-spaces-keeping-punctuation
    w = re.sub(r"([?.!,¿])", r" \1 ", w)
    w = re.sub(r'[" "]+', " ", w)

    # replacing everything with space except (a-z, A-Z, ".", "?", "!", ",", "¿")
    w = re.sub(r"[^a-zA-Z?.!,¿]+", " ", w)

    w = w.strip()

    # adding a start and an end token to the sentence
    # so that the model knows when to start and stop predicting.
    w = '<start> ' + w + ' <end>'
    return w
```

```python
en_sentence = u"May I borrow this book?"
sp_sentence = u"¿Puedo tomar prestado este libro?"
print(preprocess_sentence(en_sentence))
print(preprocess_sentence(sp_sentence))
```

```
<start> may i borrow this book ? <end>
<start> ¿ puedo tomar prestado este libro ? <end>
```

```python
# 1. Remove the accents
# 2. Clean the sentences
# 3. Return word pairs in the format: [ENGLISH, SPANISH]
def create_dataset(path, num_examples):
    lines = io.open(path, encoding='UTF-8').read().strip().split('\n')

    word_pairs = [[preprocess_sentence(w) for w in l.split('\t')] for l in lines[:num_examples]]

    return zip(*word_pairs)
```

```python
en, sp = create_dataset(path_to_file, None)
print(en[-1])
print(sp[-1])
```

```
<start> if you want to sound like a native speaker , you must be willing to practice saying the same sentence over and over in the same way that banjo players practice the same phrase over and over until they can play it correctly and at the desired tempo . <end>
<start> si quieres sonar como un hablante nativo , debes estar dispuesto a practicar diciendo la misma frase una y otra vez de la misma manera en que un musico de banjo practica el mismo fraseo una y otra vez hasta que lo puedan tocar correctamente y en el tiempo esperado . <end>
```

```python
def max_length(tensor):
    return max(len(t) for t in tensor)
```

```python
def tokenize(lang):
    lang_tokenizer = tf.keras.preprocessing.text.Tokenizer(
        filters='')
    lang_tokenizer.fit_on_texts(lang)

    tensor = lang_tokenizer.texts_to_sequences(lang)

    tensor = tf.keras.preprocessing.sequence.pad_sequences(tensor,
                                                           padding='post')

    return tensor, lang_tokenizer
```

```python
def load_dataset(path, num_examples=None):
    # creating cleaned input, output pairs
    targ_lang, inp_lang = create_dataset(path, num_examples)

    input_tensor, inp_lang_tokenizer = tokenize(inp_lang)
    target_tensor, targ_lang_tokenizer = tokenize(targ_lang)

    return input_tensor, target_tensor, inp_lang_tokenizer, targ_lang_tokenizer
```

1.1. Limit the size of the dataset to experiment faster (optional)

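Training on the complete dataset of more than 100,000 sentences takes a long time. To train faster, we can limit the size of the dataset to 30,000 sentences (translation quality will, of course, degrade with less data):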

```python
# Try experimenting with the size of that dataset
num_examples = 30000
input_tensor, target_tensor, inp_lang, targ_lang = load_dataset(path_to_file, num_examples)

# Calculate max_length of the target tensors
max_length_targ, max_length_inp = max_length(target_tensor), max_length(input_tensor)
```

```python
# Creating training and validation sets using an 80-20 split
input_tensor_train, input_tensor_val, target_tensor_train, target_tensor_val = train_test_split(input_tensor, target_tensor, test_size=0.2)

# Show length
len(input_tensor_train), len(target_tensor_train), len(input_tensor_val), len(target_tensor_val)
```

```
(24000, 24000, 6000, 6000)
```

```python
def convert(lang, tensor):
    for t in tensor:
        if t != 0:
            print("%d ----> %s" % (t, lang.index_word[t]))
```

```python
print("Input Language; index to word mapping")
convert(inp_lang, input_tensor_train[0])
print()
print("Target Language; index to word mapping")
convert(targ_lang, target_tensor_train[0])
```

```
Input Language; index to word mapping
1 ----> <start>
8 ----> no
38 ----> puedo
804 ----> confiar
20 ----> en
1000 ----> vosotras
3 ----> .
2 ----> <end>

Target Language; index to word mapping
1 ----> <start>
4 ----> i
25 ----> can
12 ----> t
345 ----> trust
6 ----> you
3 ----> .
2 ----> <end>
```

1.2. Create a tf.data dataset

```python
BUFFER_SIZE = len(input_tensor_train)
BATCH_SIZE = 64
steps_per_epoch = len(input_tensor_train)//BATCH_SIZE
embedding_dim = 256
units = 1024
vocab_inp_size = len(inp_lang.word_index)+1
vocab_tar_size = len(targ_lang.word_index)+1

dataset = tf.data.Dataset.from_tensor_slices((input_tensor_train, target_tensor_train)).shuffle(BUFFER_SIZE)
dataset = dataset.batch(BATCH_SIZE, drop_remainder=True)
```

```python
example_input_batch, example_target_batch = next(iter(dataset))
example_input_batch.shape, example_target_batch.shape
```

```
(TensorShape([64, 16]), TensorShape([64, 11]))
```

2. Write the encoder and decoder model

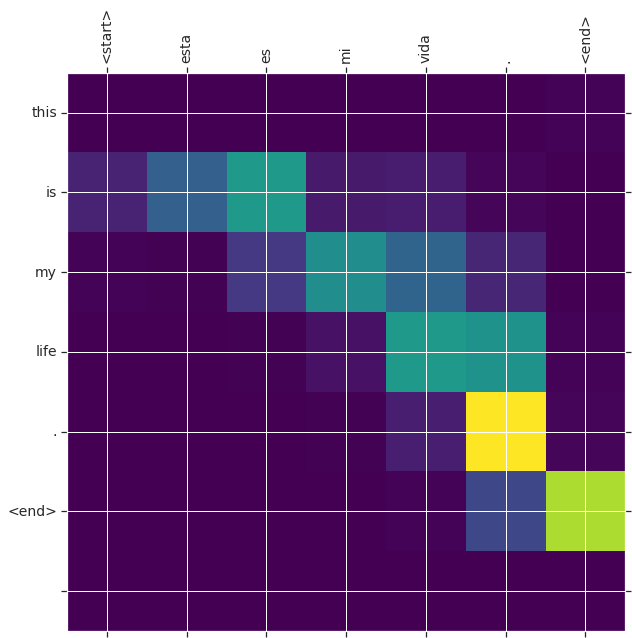

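We will implement an encoder-decoder model with attention, which you can read about in the TensorFlow Neural Machine Translation (seq2seq) tutorial. This example uses a more recent set of APIs and implements the attention equations from the seq2seq tutorial. The diagram shows that each input word is assigned a weight by the attention mechanism, which the decoder then uses to predict the next word in the sentence.

The input is put through an encoder model, which gives us the encoder output of shape (batch_size, max_length, hidden_size) and the encoder hidden state of shape (batch_size, hidden_size).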
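To make these two shapes concrete, here is a small NumPy sketch of a recurrent encoder loop. It deliberately swaps the tutorial's GRU for a plain tanh recurrent cell, and all sizes and weight names (`W_x`, `W_h`) are illustrative assumptions; only the shapes of the two results are the point.

```python
import numpy as np

rng = np.random.default_rng(0)
batch_size, max_length, embedding_dim, hidden_size = 4, 6, 8, 16

# Stand-in for embedded input tokens: (batch_size, max_length, embedding_dim).
x = rng.normal(size=(batch_size, max_length, embedding_dim))

# Weights of a plain tanh recurrent cell (an assumption; the tutorial uses a GRU).
W_x = rng.normal(scale=0.1, size=(embedding_dim, hidden_size))
W_h = rng.normal(scale=0.1, size=(hidden_size, hidden_size))

h = np.zeros((batch_size, hidden_size))  # initial hidden state
outputs = []
for t in range(max_length):
    # One recurrent step: combine the current input with the previous state.
    h = np.tanh(x[:, t, :] @ W_x + h @ W_h)
    outputs.append(h)

encoder_output = np.stack(outputs, axis=1)  # one state per time step
encoder_hidden = h                          # state after the last time step
print(encoder_output.shape, encoder_hidden.shape)
```

Keeping the state of every time step is what `return_sequences=True` requests in the tutorial's GRU, and returning the final state separately is what `return_state=True` requests.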

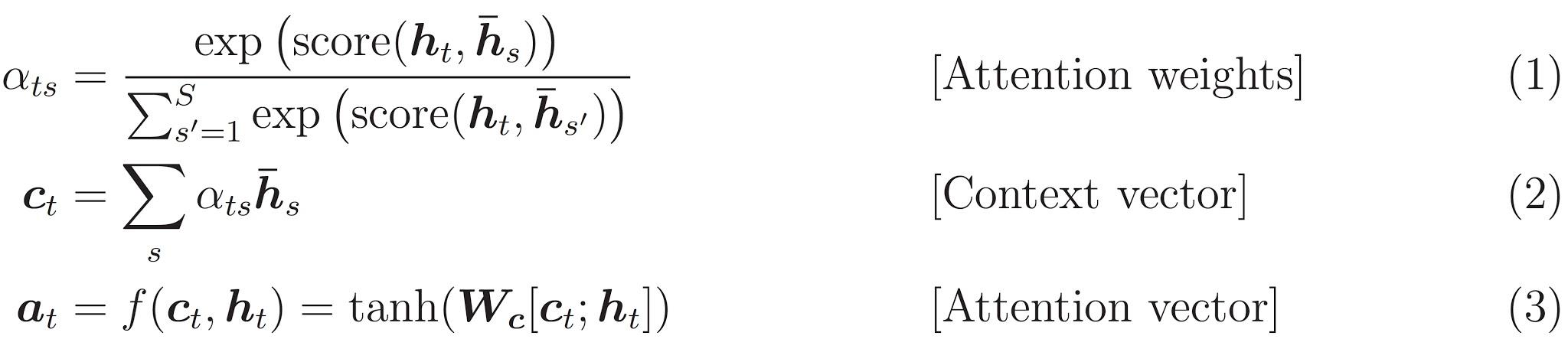

Here are the equations that are implemented:

We are using Bahdanau attention. Before writing out the simplified form, let's define the notation:

- FC = Fully connected (dense) layer

- EO = Encoder output

- H = hidden state

- X = input to the decoder

And the pseudo-code:

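- score = FC(tanh(FC(EO) + FC(H)))

- attention weights = softmax(score, axis = 1). Softmax is applied on the last axis by default, but here we want to apply it on axis 1, because the shape of score is (batch_size, max_length, 1). max_length is the length of our input. Since we are trying to assign a weight to each input position, softmax should be applied on that axis.

- context vector = sum(attention weights * EO, axis = 1). Axis 1 is chosen for the same reason as above.

- embedding output = the decoder input X passed through an embedding layer.

- merged vector = concat(embedding output, context vector)

- This merged vector is then given to the GRU.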
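The pseudo-code can be checked against a small NumPy sketch. This is an illustration under assumptions, not the tutorial's Keras implementation: the FC layers are reduced to random weight matrices without biases (`W1`, `W2`, `V`), and the sizes are arbitrary.

```python
import numpy as np


def softmax(z, axis):
    """Numerically stable softmax along the given axis."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


rng = np.random.default_rng(0)
batch_size, max_length, hidden_size, attn_units = 2, 5, 8, 4

EO = rng.normal(size=(batch_size, max_length, hidden_size))  # encoder output
H = rng.normal(size=(batch_size, hidden_size))               # decoder hidden state

# Bias-free stand-ins for the three FC layers (an assumption for brevity).
W1 = rng.normal(size=(hidden_size, attn_units))
W2 = rng.normal(size=(hidden_size, attn_units))
V = rng.normal(size=(attn_units, 1))

# score = FC(tanh(FC(EO) + FC(H))); H gets a time axis so it broadcasts over max_length.
score = np.tanh(EO @ W1 + (H @ W2)[:, None, :]) @ V     # (batch_size, max_length, 1)

# Normalise over the input positions (axis 1), not over the last axis.
attention_weights = softmax(score, axis=1)               # (batch_size, max_length, 1)

# Weighted sum of encoder outputs over the input positions.
context_vector = (attention_weights * EO).sum(axis=1)    # (batch_size, hidden_size)

print(score.shape, attention_weights.shape, context_vector.shape)
```

Because the last axis of `score` has size 1, a softmax over that axis would return a weight of 1.0 for every position, which is why axis 1 is the one that matters here.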

The shapes of all the vectors at each step are specified in the comments in the code:

```python
class Encoder(tf.keras.Model):
    def __init__(self, vocab_size, embedding_dim, enc_units, batch_sz):
        super(Encoder, self).__init__()
        self.batch_sz = batch_sz
        self.enc_units = enc_units
        self.embedding = tf.keras.layers.Embedding(vocab_size, embedding_dim)
        self.gru = tf.keras.layers.GRU(self.enc_units,
                                       return_sequences=True,
                                       return_state=True,
                                       recurrent_initializer='glorot_uniform')

    def call(self, x, hidden):
        x = self.embedding(x)
        output, state = self.gru(x, initial_state=hidden)
        return output, state

    def initialize_hidden_state(self):
        return tf.zeros((self.batch_sz, self.enc_units))
```

```python
encoder = Encoder(vocab_inp_size, embedding_dim, units, BATCH_SIZE)

# sample input
sample_hidden = encoder.initialize_hidden_state()
sample_output, sample_hidden = encoder(example_input_batch, sample_hidden)
print('Encoder output shape: (batch size, sequence length, units) {}'.format(sample_output.shape))
print('Encoder Hidden state shape: (batch size, units) {}'.format(sample_hidden.shape))
```

```
Encoder output shape: (batch size, sequence length, units) (64, 16, 1024)
Encoder Hidden state shape: (batch size, units) (64, 1024)
```

```python
class BahdanauAttention(tf.keras.Model):
    def __init__(self, units):
        super(BahdanauAttention, self).__init__()
        self.W1 = tf.keras.layers.Dense(units)
        self.W2 = tf.keras.layers.Dense(units)
        self.V = tf.keras.layers.Dense(1)

    def call(self, query, values):
        # query (hidden) shape == (batch_size, hidden size)
        # hidden_with_time_axis shape == (batch_size, 1, hidden size)
        # we are doing this to perform addition to calculate the score
        hidden_with_time_axis = tf.expand_dims(query, 1)

        # score shape == (batch_size, max_length, 1)
        # we get 1 at the last axis because we are applying score to self.V
        # the shape of the tensor before applying self.V is (batch_size, max_length, units)
        score = self.V(tf.nn.tanh(
            self.W1(values) + self.W2(hidden_with_time_axis)))

        # attention_weights shape == (batch_size, max_length, 1)
        attention_weights = tf.nn.softmax(score, axis=1)

        # context_vector shape after sum == (batch_size, hidden_size)
        context_vector = attention_weights * values
        context_vector = tf.reduce_sum(context_vector, axis=1)

        return context_vector, attention_weights
```

```python
attention_layer = BahdanauAttention(10)
attention_result, attention_weights = attention_layer(sample_hidden, sample_output)

print("Attention result shape: (batch size, units) {}".format(attention_result.shape))
print("Attention weights shape: (batch_size, sequence_length, 1) {}".format(attention_weights.shape))
```

```
Attention result shape: (batch size, units) (64, 1024)
Attention weights shape: (batch_size, sequence_length, 1) (64, 16, 1)
```

```python
class Decoder(tf.keras.Model):
    def __init__(self, vocab_size, embedding_dim, dec_units, batch_sz):
        super(Decoder, self).__init__()
        self.batch_sz = batch_sz
        self.dec_units = dec_units
        self.embedding = tf.keras.layers.Embedding(vocab_size, embedding_dim)
        self.gru = tf.keras.layers.GRU(self.dec_units,
                                       return_sequences=True,
                                       return_state=True,
                                       recurrent_initializer='glorot_uniform')
        self.fc = tf.keras.layers.Dense(vocab_size)

        # used for attention
        self.attention = BahdanauAttention(self.dec_units)

    def call(self, x, hidden, enc_output):
        # enc_output shape == (batch_size, max_length, hidden_size)
        context_vector, attention_weights = self.attention(hidden, enc_output)

        # x shape after passing through embedding == (batch_size, 1, embedding_dim)
        x = self.embedding(x)

        # x shape after concatenation == (batch_size, 1, embedding_dim + hidden_size)
        x = tf.concat([tf.expand_dims(context_vector, 1), x], axis=-1)

        # passing the concatenated vector to the GRU
        output, state = self.gru(x)

        # output shape == (batch_size * 1, hidden_size)
        output = tf.reshape(output, (-1, output.shape[2]))

        # output shape == (batch_size, vocab)
        x = self.fc(output)

        return x, state, attention_weights
```

```python
decoder = Decoder(vocab_tar_size, embedding_dim, units, BATCH_SIZE)

sample_decoder_output, _, _ = decoder(tf.random.uniform((64, 1)),
                                      sample_hidden, sample_output)

print('Decoder output shape: (batch_size, vocab size) {}'.format(sample_decoder_output.shape))
```

```
Decoder output shape: (batch_size, vocab size) (64, 4935)
```

3. Define the optimizer and the loss function
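The loss function in this section zeroes out the contribution of padding positions (token id 0) before averaging. The NumPy sketch below mirrors that idea on a toy batch; the function name `masked_sparse_crossentropy` and the sample values are assumptions made for illustration.

```python
import numpy as np


def masked_sparse_crossentropy(real, logits):
    """Cross-entropy from logits, with padded targets (id 0) masked out, averaged over the batch."""
    # Log-softmax over the vocabulary axis, computed in a numerically stable way.
    shifted = logits - logits.max(axis=-1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))

    # Negative log-probability of the true token for every example.
    per_example = -log_probs[np.arange(len(real)), real]

    # 1.0 for real tokens, 0.0 for padding.
    mask = (real != 0).astype(per_example.dtype)

    # The mean runs over all batch entries, including the masked ones.
    return (per_example * mask).mean()


real = np.array([3, 0, 1])   # the second target is padding
logits = np.zeros((3, 5))    # uniform predictions over a 5-word vocabulary
loss = masked_sparse_crossentropy(real, logits)
print(loss)
```

With uniform logits each unmasked example contributes log(5), so the result is 2·log(5)/3: the padded entry adds nothing to the sum but still counts in the denominator of the mean.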

```python
optimizer = tf.keras.optimizers.Adam()
loss_object = tf.keras.losses.SparseCategoricalCrossentropy(
    from_logits=True, reduction='none')

def loss_function(real, pred):
    mask = tf.math.logical_not(tf.math.equal(real, 0))
    loss_ = loss_object(real, pred)

    mask = tf.cast(mask, dtype=loss_.dtype)
    loss_ *= mask

    return tf.reduce_mean(loss_)
```

4. Checkpoints (object-based saving)

```python
checkpoint_dir = './training_checkpoints'
checkpoint_prefix = os.path.join(checkpoint_dir, "ckpt")
checkpoint = tf.train.Checkpoint(optimizer=optimizer,
                                 encoder=encoder,
                                 decoder=decoder)
```

5. Training

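- Pass the input through the encoder, which returns the encoder output and the encoder hidden state.

- The encoder output, the encoder hidden state and the decoder input (which is the start token) are passed to the decoder.

- The decoder returns the predictions and the decoder hidden state.

- The decoder hidden state is then passed back into the model, and the predictions are used to calculate the loss.

- Use teacher forcing to decide the next input to the decoder.

- Teacher forcing is the technique where the target word is passed as the next input to the decoder.

- The final step is to calculate the gradients and apply them with the optimizer (backpropagation).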
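Teacher forcing in the steps above amounts to shifting the target sequence by one position: at step t the decoder receives the true token from step t-1 and is scored against the true token at step t. A minimal sketch, where the helper name `teacher_forcing_pairs` is hypothetical and the ids come from the target sentence printed earlier:

```python
def teacher_forcing_pairs(target_ids):
    """Return (decoder_input, expected_output) pairs for one target sequence."""
    return [(target_ids[t - 1], target_ids[t]) for t in range(1, len(target_ids))]


# Ids for "<start> i can t trust you . <end>" from the index-to-word mapping above.
target = [1, 4, 25, 12, 345, 6, 3, 2]
pairs = teacher_forcing_pairs(target)
for dec_input, expected in pairs:
    print(dec_input, "->", expected)
```

The first pair feeds the start token and expects the first real word; the last pair expects the end token. The decoder's own predictions are never fed back during training.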

```python
@tf.function
def train_step(inp, targ, enc_hidden):
    loss = 0

    with tf.GradientTape() as tape:
        enc_output, enc_hidden = encoder(inp, enc_hidden)

        dec_hidden = enc_hidden

        dec_input = tf.expand_dims([targ_lang.word_index['<start>']] * BATCH_SIZE, 1)

        # Teacher forcing - feeding the target as the next input
        for t in range(1, targ.shape[1]):
            # passing enc_output to the decoder
            predictions, dec_hidden, _ = decoder(dec_input, dec_hidden, enc_output)

            loss += loss_function(targ[:, t], predictions)

            # using teacher forcing
            dec_input = tf.expand_dims(targ[:, t], 1)

    batch_loss = (loss / int(targ.shape[1]))

    variables = encoder.trainable_variables + decoder.trainable_variables

    gradients = tape.gradient(loss, variables)

    optimizer.apply_gradients(zip(gradients, variables))

    return batch_loss
```

```python
EPOCHS = 10

for epoch in range(EPOCHS):
    start = time.time()

    enc_hidden = encoder.initialize_hidden_state()
    total_loss = 0

    for (batch, (inp, targ)) in enumerate(dataset.take(steps_per_epoch)):
        batch_loss = train_step(inp, targ, enc_hidden)
        total_loss += batch_loss

        if batch % 100 == 0:
            print('Epoch {} Batch {} Loss {:.4f}'.format(epoch + 1,
                                                         batch,
                                                         batch_loss.numpy()))
    # saving (checkpoint) the model every 2 epochs
    if (epoch + 1) % 2 == 0:
        checkpoint.save(file_prefix=checkpoint_prefix)

    print('Epoch {} Loss {:.4f}'.format(epoch + 1,
                                        total_loss / steps_per_epoch))
    print('Time taken for 1 epoch {} sec\n'.format(time.time() - start))
```

```
......
Epoch 10 Batch 0 Loss 0.1219
Epoch 10 Batch 100 Loss 0.1374
Epoch 10 Batch 200 Loss 0.1084
Epoch 10 Batch 300 Loss 0.0994
Epoch 10 Loss 0.1088
Time taken for 1 epoch 29.2324090004 sec
```

6. Translate

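- The evaluate function is similar to the training loop, except that we do not use teacher forcing here. The input to the decoder at each time step is its previous prediction, along with the hidden state and the encoder output.

- Stop predicting when the model predicts the end token.

- Store the attention weights for every time step.

Note: The encoder output is calculated only once for each input.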
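The decoding procedure described above is a greedy loop: take the highest-scoring token, feed it back in, and stop at the end token or at a length limit. The sketch below shows only that control flow. `greedy_decode` and `toy_step` are hypothetical names, and `toy_step` is a trivial stand-in for the real decoder, not a model.

```python
def greedy_decode(step_fn, start_id, end_id, max_steps):
    """Feed each prediction back as the next input until end_id or max_steps is reached."""
    token, state, output = start_id, None, []
    for _ in range(max_steps):
        scores, state = step_fn(token, state)
        # Greedy choice: index of the highest score (argmax).
        token = max(range(len(scores)), key=scores.__getitem__)
        output.append(token)
        if token == end_id:
            break
    return output


def toy_step(token, state):
    """Hypothetical stand-in for the decoder: always scores (token + 1) highest."""
    vocab_size = 6
    scores = [0.0] * vocab_size
    scores[(token + 1) % vocab_size] = 1.0
    return scores, state


decoded = greedy_decode(toy_step, start_id=1, end_id=4, max_steps=10)
print(decoded)
```

The `max_steps` limit plays the role of `max_length_targ` in the tutorial's `evaluate` function: it bounds the loop when the end token is never predicted.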

```python
def evaluate(sentence):
    attention_plot = np.zeros((max_length_targ, max_length_inp))

    sentence = preprocess_sentence(sentence)

    inputs = [inp_lang.word_index[i] for i in sentence.split(' ')]
    inputs = tf.keras.preprocessing.sequence.pad_sequences([inputs],
                                                           maxlen=max_length_inp,
                                                           padding='post')
    inputs = tf.convert_to_tensor(inputs)

    result = ''

    hidden = [tf.zeros((1, units))]
    enc_out, enc_hidden = encoder(inputs, hidden)

    dec_hidden = enc_hidden
    dec_input = tf.expand_dims([targ_lang.word_index['<start>']], 0)

    for t in range(max_length_targ):
        predictions, dec_hidden, attention_weights = decoder(dec_input,
                                                             dec_hidden,
                                                             enc_out)

        # storing the attention weights to plot later on
        attention_weights = tf.reshape(attention_weights, (-1, ))
        attention_plot[t] = attention_weights.numpy()

        predicted_id = tf.argmax(predictions[0]).numpy()

        result += targ_lang.index_word[predicted_id] + ' '

        if targ_lang.index_word[predicted_id] == '<end>':
            return result, sentence, attention_plot

        # the predicted ID is fed back into the model
        dec_input = tf.expand_dims([predicted_id], 0)

    return result, sentence, attention_plot
```

```python
# function for plotting the attention weights
def plot_attention(attention, sentence, predicted_sentence):
    fig = plt.figure(figsize=(10,10))
    ax = fig.add_subplot(1, 1, 1)
    ax.matshow(attention, cmap='viridis')

    fontdict = {'fontsize': 14}

    ax.set_xticklabels([''] + sentence, fontdict=fontdict, rotation=90)
    ax.set_yticklabels([''] + predicted_sentence, fontdict=fontdict)

    plt.show()
```

```python
def translate(sentence):
    result, sentence, attention_plot = evaluate(sentence)

    print('Input: %s' % (sentence))
    print('Predicted translation: {}'.format(result))

    attention_plot = attention_plot[:len(result.split(' ')), :len(sentence.split(' '))]
    plot_attention(attention_plot, sentence.split(' '), result.split(' '))
```

7. Restore the latest checkpoint and test

```python
# restoring the latest checkpoint in checkpoint_dir
checkpoint.restore(tf.train.latest_checkpoint(checkpoint_dir))
```

```python
translate(u'hace mucho frio aqui.')
```

```
Input: <start> hace mucho frio aqui . <end>
Predicted translation: it s very cold here . <end>
```

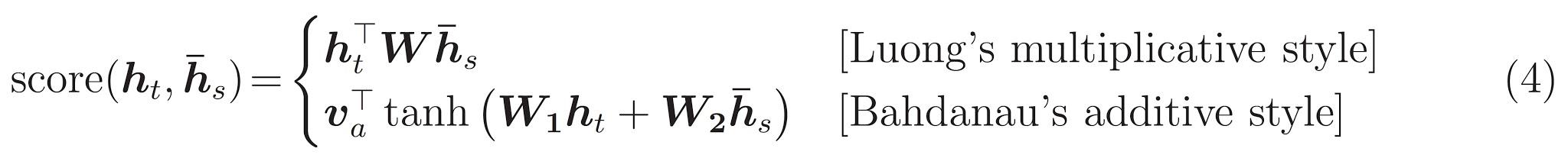

```python
translate(u'esta es mi vida.')
```

```
Input: <start> esta es mi vida . <end>
Predicted translation: this is my life . <end>
```

```python
translate(u'¿todavia estan en casa?')
```

```
Input: <start> ¿ todavia estan en casa ? <end>
Predicted translation: are you still at home ? <end>
```

```python
# wrong translation
translate(u'trata de averiguarlo.')
```

```
Input: <start> trata de averiguarlo . <end>
Predicted translation: try to figure it out . <end>
```

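8. Next steps

- Download a different dataset to experiment with translations, for example English to German or English to French.

- Experiment with training on a larger dataset, or with more epochs.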

Latest version: https://www.mashangxue123.com/tensorflow/tf2-tutorials-text-nmt_with_attention.html

English version: https://tensorflow.google.cn/beta/tutorials/text/nmt_with_attention

Translation suggestions (PR): https://github.com/mashangxue/tensorflow2-zh/edit/master/r2/tutorials/text/nmt_with_attention.md